Digital marketers and SEO professionals know how important search engine indexing is. This is exactly why they do their best to help Google crawl and index their sites properly, investing time and resources into on-page and off-page optimization such as content, links, tags, meta descriptions, image optimization, website structure, and so on.

But while there’s no denying that high-quality website optimization is fundamental to success in search, forgetting about the technical side of SEO can be a serious mistake. If you have never heard of robots.txt, meta robots tags, XML sitemaps, microformats, and X-Robots-Tag headers, you might be in trouble.

Don’t get panicky, though. In this article, I’ll explain how to use and set up robots.txt and meta robots tags. I’m going to provide several practical examples as well. Let’s start!

What Is Robots.txt?

Robots.txt is a text file that tells search engine bots (also known as crawlers, robots, or spiders) how to crawl website pages, which in turn affects how those pages get indexed. A robots.txt file must be placed in the top-level (root) directory of your website, because that is the only place robots look for its instructions.

To communicate commands to different types of search crawlers, a robots.txt file has to follow specific standards featured in the Robots exclusion protocol (REP), which was created back in 1994 and then substantially extended in 1996, 1997, and 2005.

Over time, the REP has gradually been extended to support crawler-specific directives, URI-pattern extensions, indexer directives (also known as REP tags or robots meta tags), and the microformat rel=“nofollow”.

Because robots.txt files tell search bots which parts of a website to crawl and which to skip, knowing how to set up these files is important. An incorrectly configured robots.txt file can cause multiple crawling and indexing errors. So, every time you start a new SEO campaign, check your robots.txt file with Google’s robots.txt testing tool.

Don’t forget: If everything is set up correctly, a robots.txt file helps crawlers spend their time on the pages you actually want indexed, which can speed up the indexing process.

What to Hide With Robots.txt

Robots.txt files can be used to keep crawlers out of certain directories, categories, and pages. To that end, use the “Disallow” directive.

Here are some pages you should hide using a robots.txt file:

- Pages with duplicate content

- Pagination pages

- Dynamic product and service pages

- Account pages

- Admin pages

- Shopping cart

- Chats

- Thank-you pages

Basically, it looks like this:

In the example above, I instruct Googlebot to avoid crawling and indexing all pages related to user accounts, cart, and multiple dynamic pages that are generated when users look for products in the search bar or sort them by price, and so on.
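Rules for this kind of setup could look roughly like the sketch below. The paths (`/account/`, `/cart/`, `/search`, `/catalog/sort/`) are hypothetical stand-ins for a shop’s account, cart, search, and sorting pages, and the file is checked with Python’s standard-library `urllib.robotparser`. That parser only does simple prefix matching, so the sketch avoids the `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules addressed to Googlebot only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /account/
Disallow: /cart/
Disallow: /search
Disallow: /catalog/sort/
"""

SITE = "https://www.website-example.com"

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/account/orders", "/cart/", "/search?q=shoes",
             "/catalog/sort/price", "/products/red-shoes"]:
    verdict = "crawl" if parser.can_fetch("Googlebot", SITE + path) else "blocked"
    print(f"{path}: {verdict}")

# The group names Googlebot, so other crawlers are not restricted by it.
print("Bingbot on /cart/:", parser.can_fetch("Bingbot", SITE + "/cart/"))
```

Because the group is addressed to Googlebot alone, a crawler such as Bingbot falls through to “allowed”; use `User-agent: *` if the rules should apply to every bot.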

Yet, don’t forget that any robots.txt file is publicly available on the web. To access a robots.txt file, simply type:

www.website-example.com/robots.txt

This availability means that you can’t secure or hide any data within it. Moreover, bad robots and malicious crawlers can take advantage of a robots.txt file, using it as a detailed map to navigate your most valuable web pages.
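Because the file always sits at the root of a host, you can work out which robots.txt governs any page by keeping only the scheme and host of its URL. A minimal Python sketch (the function name is illustrative):

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    Crawlers only look for robots.txt at the root of a host, so the
    path, query, and fragment of the page URL are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.website-example.com/blog/post-1?ref=home"))
# Each subdomain is a separate host with its own file.
print(robots_txt_url("https://blog.hubspot.com/marketing"))
```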

Also, keep in mind that robots.txt rules are requests, not enforceable commands. Nothing technically prevents a search bot from crawling and indexing your site even if you instruct it not to. The good news is that most search engines (like Google, Bing, Yahoo, and Yandex) honor robots.txt directives.

Robots.txt files definitely have drawbacks. Nonetheless, I strongly recommend that you make them an integral part of every SEO campaign. Google recognizes and honors robots.txt directives and, in most cases, having Google under your belt is more than enough.

How to Use Robots.txt

Robots.txt files are pretty flexible and can be used in many ways. Their main benefit, however, is that they enable SEO experts to “allow” or “disallow” multiple pages at once without having to access the code of every page, one by one.

For example, you can block all search crawlers from your entire site, like this:

    User-agent: *
    Disallow: /

Or block crawling of specific directories and categories, like this:

    User-agent: *
    Disallow: /no-index/
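To see how the two rule sets differ, you can feed both to Python’s standard-library `urllib.robotparser` and ask which URLs Googlebot may fetch. The URLs below are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse a robots.txt body held in a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


block_everything = make_parser("User-agent: *\nDisallow: /")
block_directory = make_parser("User-agent: *\nDisallow: /no-index/")

site = "https://www.website-example.com"
for url in [site + "/", site + "/no-index/page.html", site + "/about/"]:
    # Columns: URL, allowed under "Disallow: /", allowed under "Disallow: /no-index/"
    print(url,
          block_everything.can_fetch("Googlebot", url),
          block_directory.can_fetch("Googlebot", url))
```

The first file blocks every URL on the host; the second only blocks URLs whose path starts with `/no-index/`.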

It’s also useful for excluding multiple pages at once. Just collect the URLs you want to keep search crawlers away from, add a “Disallow” line for each of them in your robots.txt and, voilà, Googlebot will no longer crawl those pages.
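If the list of URLs is long, it can be easier to generate those lines than to type them. A minimal Python sketch, assuming you already have the full URLs in a list (the URLs and the `build_robots_txt` helper are hypothetical); the result is parsed back with `urllib.robotparser` as a sanity check:

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def build_robots_txt(urls_to_block: list[str], user_agent: str = "*") -> str:
    """Build a robots.txt body with one Disallow line per URL, in input order."""
    lines = [f"User-agent: {user_agent}"]
    seen = set()
    for url in urls_to_block:
        parts = urlsplit(url)
        # Keep the path and any query string; scheme and host are implied
        # by where the robots.txt file itself is served from.
        rule = parts.path + ("?" + parts.query if parts.query else "")
        if rule and rule not in seen:  # skip empty paths and duplicates
            seen.add(rule)
            lines.append(f"Disallow: {rule}")
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt([
    "https://www.website-example.com/thank-you/",
    "https://www.website-example.com/checkout/step-2",
    "https://www.website-example.com/thank-you/",  # duplicate, emitted once
])
print(robots_txt)

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("Googlebot", "https://www.website-example.com/thank-you/"))  # False
```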

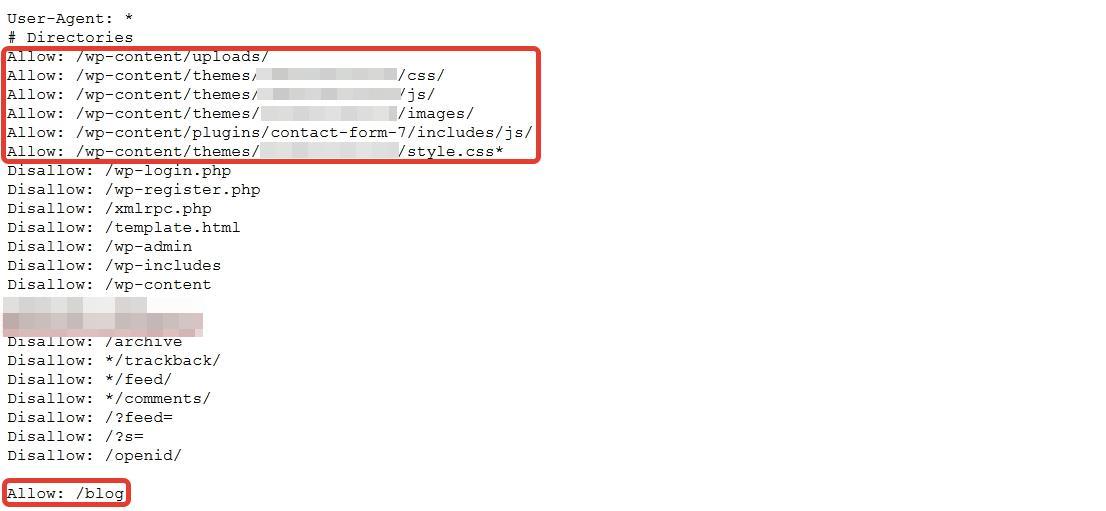

More importantly, a robots.txt file lets you control access selectively, opening up certain pages, categories, and even individual CSS and JS files while blocking others. Have a look at the example below:

Here, we have disallowed WordPress system pages and specific categories, but wp-content files, JS plugins, CSS styles, and the blog are allowed. This way, spiders can focus on crawling the useful code and categories.

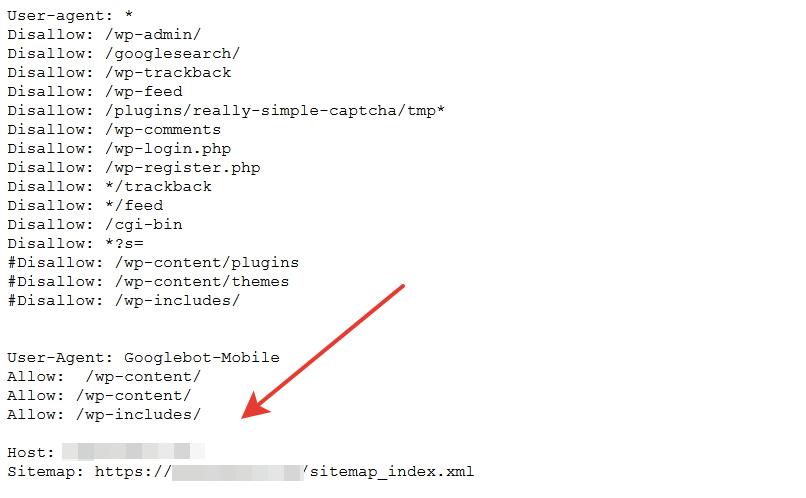
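A hypothetical file in the same spirit could look like the sketch below, checked with Python’s `urllib.robotparser`. One caveat: Google resolves conflicting Allow and Disallow lines by picking the most specific (longest) matching path, whereas Python’s parser uses the first matching line, so the narrower Allow lines are listed first to make both interpretations agree. All paths are illustrative.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical WordPress rules. Narrow Allow lines come before the broader
# Disallow lines so that first-match and longest-match parsers agree.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-includes/js/
Allow: /wp-includes/css/
Allow: /wp-content/
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /category/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "https://www.website-example.com"
for path in ["/wp-includes/js/jquery/jquery.js", "/wp-includes/version.php",
             "/wp-content/themes/site/style.css", "/wp-admin/options.php",
             "/category/news/", "/blog/hello-world/"]:
    print(path, parser.can_fetch("Googlebot", site + path))
```

Scripts and styles under `/wp-includes/js/` and `/wp-includes/css/` stay crawlable even though the rest of `/wp-includes/` is blocked, and URLs that match no rule (like the blog post) are allowed by default.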

One more important thing: A robots.txt file is one of the places where you can tell crawlers where your sitemap.xml file lives. Put the Sitemap line after the User-agent, Disallow, Allow, and Host lines. Like this:
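A minimal sketch of a file in that order, with hypothetical paths and URLs. Parsing it with Python’s `urllib.robotparser` (whose `site_maps()` method needs Python 3.8 or later) confirms that the Sitemap line is picked up; the Host line, a Yandex-specific directive, is simply ignored by this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: User-agent -> Disallow -> Allow -> Host -> Sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Allow: /blog/
Host: www.website-example.com
Sitemap: https://www.website-example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed sitemap URLs, or None if there are none.
print(parser.site_maps())  # ['https://www.website-example.com/sitemap.xml']
```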

Note: You can also submit your sitemap manually in Google Search Console and, in case you target Bing, in Bing Webmaster Tools. This is a safer approach, because the sitemap’s location then doesn’t have to appear in your public robots.txt file, which makes it harder for webmasters of competitor sites to find and copy your content.

Even though robots.txt structure and settings are pretty straightforward, how you set up the file can make or break your SEO campaign. Be careful with settings: You can easily “disallow” your entire site by mistake and then wait for traffic and customers to no avail.
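One way to catch that kind of mistake before a new robots.txt goes live is to test it against a few pages that must stay crawlable. A minimal Python sketch using `urllib.robotparser`; the helper name and the list of key URLs are hypothetical:

```python
from urllib.robotparser import RobotFileParser


def blocked_key_pages(robots_txt: str, key_urls: list[str],
                      user_agent: str = "Googlebot") -> list[str]:
    """Return the key URLs that robots_txt would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in key_urls if not parser.can_fetch(user_agent, url)]


KEY_URLS = [
    "https://www.website-example.com/",
    "https://www.website-example.com/products/",
]

# A stray "Disallow: /" in the catch-all group blocks every key page.
print(blocked_key_pages("User-agent: *\nDisallow: /\n", KEY_URLS))
# Blocking only the cart leaves the key pages crawlable.
print(blocked_key_pages("User-agent: *\nDisallow: /cart/\n", KEY_URLS))
```

A non-empty result is a signal to stop and review the file before publishing it.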

What Are Meta Robots Tags?

Meta robots tags (REP tags) are indexer directives placed in a page’s HTML head section that tell search engine spiders how to crawl and index that specific page. They enable SEO professionals to target individual pages and tell crawlers what to index and what to follow.

How to Use Meta Robots Tags

Meta robots tags are pretty simple to use.

First, there aren’t many REP tags. There are only four major tag parameters:

- Follow

- Index

- Nofollow

- Noindex

Second, it doesn’t take much time to set up meta robots tags. In four simple steps, you can take your website indexation process up a level:

- View a page’s source code by pressing Ctrl + U in your browser.

- Copy the relevant part of the page’s code (usually the head section) into a separate document.

- Provide step-by-step guidelines to developers using this document. Focus on how, where, and which meta robots tags to inject into the code.

- Check to make sure the developer has implemented the tags correctly. I recommend using the Screaming Frog SEO Spider to do so.
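For a quick spot check of a single page outside a crawler like Screaming Frog, a short script can pull the meta robots tags out of its HTML. This is a minimal sketch using Python’s standard-library `html.parser`; the class and function names are illustrative and the HTML is a made-up sample, which also shows what a meta robots tag looks like inside `<head>`.

```python
from html.parser import HTMLParser


class MetaRobotsCollector(HTMLParser):
    """Collect (name, content) pairs from <meta name="robots"> and <meta name="googlebot">."""

    NAMES = {"robots", "googlebot"}

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not values.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        name = attributes.get("name", "").lower()
        if name in self.NAMES:
            self.tags.append((name, attributes.get("content", "")))


def find_meta_robots(html: str) -> list[tuple[str, str]]:
    collector = MetaRobotsCollector()
    collector.feed(html)
    collector.close()
    return collector.tags


page = """<html><head>
<meta charset="utf-8">
<meta name="robots" content="noindex, follow">
</head><body>...</body></html>"""
print(find_meta_robots(page))  # [('robots', 'noindex, follow')]
```

Self-closing tags such as `<meta ... />` are handled too, because `HTMLParser` routes them through `handle_starttag` by default.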

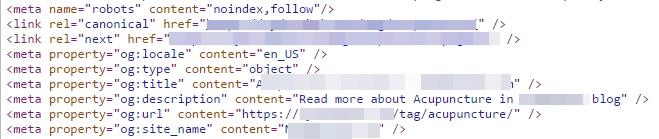

The screenshot below demonstrates what meta robots tags may look like (check out the first line of code):

Third, meta robots tags are recognized by major search engines: Google, Bing, Yahoo, and Yandex. You don’t have to tweak the code for each individual search engine (unless you use tags that only a specific search engine honors).

Main Meta Robots Tags Parameters

As I mentioned above, there are four main REP tag parameters: follow, index, nofollow, and noindex. Here’s how you can use them:

- index, follow: allow search bots to index a page and follow its links

- noindex, nofollow: prevent search bots from indexing a page and following its links

- index, nofollow: allow search engines to index a page but tell spiders not to follow its links

- noindex, follow: exclude a page from search but allow spiders to follow its links (so link juice still flows to the linked pages and can help their rankings)
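The four combinations boil down to two independent switches. A minimal Python sketch (the function name is illustrative) that turns a tag’s content value into index and follow flags, assuming that anything not explicitly blocked defaults to index, follow, and treating “none” as shorthand for “noindex, nofollow”, as Google documents it:

```python
def interpret_meta_robots(content: str) -> dict[str, bool]:
    """Map a meta robots content value to index/follow flags."""
    # Directives are comma-separated and case-insensitive.
    directives = {d.strip().lower() for d in content.split(",") if d.strip()}
    return {
        "index": not ({"noindex", "none"} & directives),
        "follow": not ({"nofollow", "none"} & directives),
    }


for value in ["index, follow", "noindex, nofollow", "index, nofollow", "noindex, follow"]:
    print(value, interpret_meta_robots(value))
```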

REP tag parameters vary. Here are some of the rarely used ones:

- none

- noarchive

- nosnippet

- unavailable_after

- noimageindex

- nocache

- noodp

- notranslate

Meta robots tags are essential if you need to optimize specific pages. Just access the code and instruct developers on what to do.

If your site runs on an advanced CMS (OpenCart, PrestaShop) or uses specific plugins (like WP Yoast), you can also inject meta tags and their parameters straight into page templates. This allows you to cover multiple pages at once without having to ask developers for help.
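The idea behind template-level tags is one rule per page type rather than one per page. A minimal Python sketch of that idea; the page types and their directives are hypothetical, and real CMSs and plugins such as Yoast expose this through their own settings rather than through a function like this one:

```python
# Hypothetical page types and the directives a shared template assigns to them.
DIRECTIVES_BY_PAGE_TYPE = {
    "product": "index, follow",
    "search_results": "noindex, follow",
    "cart": "noindex, nofollow",
    "thank_you": "noindex, nofollow",
}


def meta_robots_tag(page_type: str) -> str:
    """Return the meta robots tag a template would emit for page_type."""
    # Unknown page types fall back to the default behavior: index, follow.
    content = DIRECTIVES_BY_PAGE_TYPE.get(page_type, "index, follow")
    return f'<meta name="robots" content="{content}">'


print(meta_robots_tag("cart"))  # <meta name="robots" content="noindex, nofollow">
```

Changing one entry in the mapping updates every page of that type at once, which is the main advantage over editing pages individually.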

Basic Rules for Setting Up Robots.txt and Meta Robots Tags

Knowing how to set up and use a robots.txt file and meta robots tags is extremely important. A single mistake can spell death for your entire campaign.

I personally know several digital marketers who spent months doing SEO only to realize that their websites were blocked from crawling in robots.txt. Others abused the “nofollow” tag so much that they lost backlinks in droves.

Dealing with robots.txt files and REP tags is pretty technical, which can potentially lead to many mistakes. Fortunately, there are several basic rules that will help you implement them successfully.

Robots.txt

- Place your robots.txt file in the top-level (root) directory of your website, for example at www.website-example.com/robots.txt. Crawlers only look for it there.

- Structure your robots.txt consistently, like this: User-agent → Disallow → Allow → Host → Sitemap. A predictable order makes the file easier to read and audit. (Google itself applies the most specific matching rule regardless of where it appears in the group.)

- Make sure that every URL you want to “Allow:” or “Disallow:” is placed on its own line. If several URLs appear on a single line, crawlers won’t be able to interpret the rule correctly.

- Name the file robots.txt in lowercase. File names are case sensitive, so crawlers looking for “robots.txt” won’t find “Robots.TXT.”

- Don’t put spaces inside a path. For instance, a line like “Disallow: /cars/ /audi/” would cause errors in the robots.txt file.

- Remember that only * and $ have special meaning (as wildcards); every other character is matched literally as part of the path.

- Create separate robots.txt files for different subdomains. For example, “hubspot.com” and “blog.hubspot.com” have individual files with directory- and page-specific directives.

- Use # to leave comments in your robots.txt file. Crawlers ignore everything from the # character to the end of the line.

- Don’t rely on robots.txt for security purposes. Use passwords and other security mechanisms to protect your site from hacking, scraping, and data fraud.
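A couple of these rules are easy to check automatically before uploading the file. A minimal Python sketch (the function name is illustrative) that flags a wrongly cased file name and Allow/Disallow lines carrying more than one path; it is not a full robots.txt validator:

```python
def lint_robots_txt(filename: str, body: str) -> list[str]:
    """Flag a few of the mistakes listed above. Not a full robots.txt validator."""
    problems = []
    # The file name is case sensitive and must be exactly "robots.txt".
    if filename != "robots.txt":
        problems.append(f"file must be named 'robots.txt', not {filename!r}")
    for number, raw in enumerate(body.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        # A space inside an Allow/Disallow value usually means two paths
        # were put on one line, e.g. "Disallow: /cars/ /audi/".
        if field.lower() in ("allow", "disallow") and " " in value:
            problems.append(f"line {number}: one path per line, found {value!r}")
    return problems


print(lint_robots_txt("Robots.TXT", "User-agent: *\nDisallow: /cars/ /audi/  # two paths\n"))
print(lint_robots_txt("robots.txt", "User-agent: *\nDisallow: /cars/\nDisallow: /audi/\n"))
```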

Meta Robots Tags

- Be consistent with case. Google and other search engines recognize attributes, values, and parameters in both uppercase and lowercase, so you can use either. I strongly recommend that you stick to one option to improve code readability.

- Avoid multiple tags. By doing this, you’ll avoid conflicts in code. Instead, put multiple comma-separated values in a single tag, for example content=“noindex, nofollow”.

- Don’t use conflicting meta tags, to avoid indexing mistakes. For example, if a page has one meta robots tag with “follow” and another with “nofollow,” only “nofollow” will be taken into account. This is because robots apply the most restrictive value.
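The “most restrictive value wins” behavior can be sketched by pooling the directives from every meta robots tag on a page before deciding. A minimal Python sketch (the function name is illustrative), again treating “none” as “noindex, nofollow” and defaulting to index, follow:

```python
def combine_meta_robots(contents: list[str]) -> dict[str, bool]:
    """Combine several meta robots content values; the most restrictive wins."""
    directives = set()
    for content in contents:
        directives |= {d.strip().lower() for d in content.split(",") if d.strip()}
    # Any blocking directive anywhere on the page overrides a permissive one.
    return {
        "index": not ({"noindex", "none"} & directives),
        "follow": not ({"nofollow", "none"} & directives),
    }


print(combine_meta_robots(["follow", "nofollow"]))  # {'index': True, 'follow': False}
```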

Note: You can easily implement both robots.txt and meta robots tags on your site. However, be careful to avoid confusion between the two.

The basic rule here is that restrictive values take precedence. So, if you “allow” crawling of a specific page in a robots.txt file but accidentally “noindex” it in a meta robots tag, spiders won’t index the page.

Also, remember: If you want to give instructions specifically to Google, set the tag’s name to “googlebot” instead of “robots.” It works like “robots” but applies only to Google’s crawler; all the other search crawlers ignore it.
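The scoping can be sketched as a filter over a page’s meta tags: a tag named “robots” applies to every crawler, while a tag named after a crawler applies only to that crawler. A minimal Python sketch; the function name and the (name, content) input format are assumptions for illustration:

```python
def directives_for(crawler: str, tags: list[tuple[str, str]]) -> set[str]:
    """Collect the directives that apply to one crawler.

    tags holds (name, content) pairs from a page's meta tags. A tag named
    "robots" applies to every crawler; a tag named after a crawler (for
    example "googlebot") applies only to that crawler.
    """
    applicable = set()
    for name, content in tags:
        if name.lower() in ("robots", crawler.lower()):
            applicable |= {d.strip().lower() for d in content.split(",") if d.strip()}
    return applicable


tags = [("googlebot", "noindex")]
print(directives_for("googlebot", tags))  # {'noindex'}
print(directives_for("bingbot", tags))    # set()
```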

Conclusion

Search engine optimization is not only about keywords, links, and content. The technical part of SEO is also important. Actually, it can make the difference for your entire digital marketing campaign. Thus, learn how to properly use and set up robots.txt files and meta robots tags as soon as possible. I hope the practices and suggestions I describe in this article will guide you through the process smoothly.

Image Credits

Featured image: Rawpixel/DepositPhotos

Screenshots by Sergey Grybniak. Taken February 2017

Author: Sergey Grybniak

The post Best Practices for Setting Up Meta Robots Tags and Robots.txt by @grybniak appeared first on On Page SEO Checker.

source http://www.onpageseochecker.com/best-practices-for-setting-up-meta-robots-tags-and-robots-txt-by-grybniak/
