Every SEO professional knows that top search rankings require a properly researched strategy, optimized on-page and off-page SEO factors, engaging content, and long-lasting search value. In other words, breaking into the top ten on Google is by no means easy.

Because a white hat SEO strategy takes a lot of time, some SEO practitioners still choose to cut corners when optimizing their pages.

For obvious reasons, I don’t recommend using any black hat SEO techniques. But it can be challenging to tell white hat SEO from its black hat variety, or to draw a clear line between white hat and gray hat optimization.

Search engine optimization is constantly evolving, and some of the techniques that were once legitimate are strictly forbidden now. Let’s set the record straight.

Here are six on-page optimization techniques that Google dislikes and that could get your website penalized.

#1 Keyword Stuffing

Digital marketers today recognize the importance and value of content marketing. Several years ago, however, marketers could produce tons of thin content with zero value to push their way through to the top of search results.

Keyword stuffing (or keyword stacking) was one of the most common content generation methods due to its simple process:

- Research search terms you want to rank for (look for exact-match type keywords)

- Produce content with a focus on the topic but not too in-depth

- Stuff content with keywords (repeat the exact-match keywords and phrases frequently)

- Make sure that meta tags are also stuffed with keywords

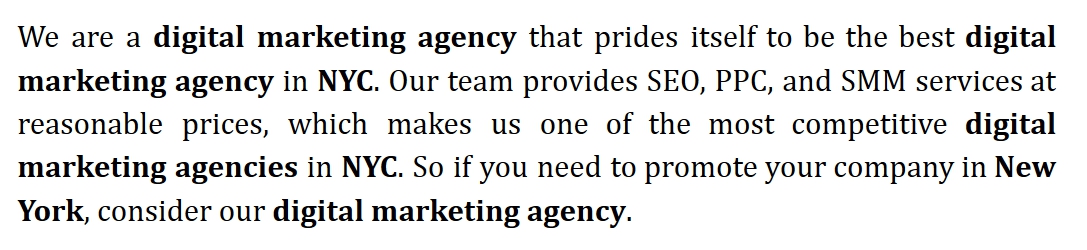

Like this:

See how one keyword phrase is repeated again and again?

Fortunately, Google Panda made that on-page optimization method obsolete in 2011.

The Google of 2017 favors meaningful and authentic content. It is smart enough to detect and penalize sites with thin, low-quality, or plagiarized content, so marketers should avoid stuffing their content with repetitive keywords.

Solution

Embrace a “reader first” approach. Develop a habit of creating content that really matters to your target audience. Keywords should always come second. Use your main keyword sparingly (2-3 times per 500 words) and include a couple of highly relevant long-tail keywords to help crawlers identify the value of your content. Using a variety of related keywords, rather than one repeated phrase, also helps your content get noticed.
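The “2-3 times per 500 words” guideline is simple arithmetic: occurrences × 500 ÷ total words. Below is a minimal Python sketch for checking a draft against it. It assumes a naive tokenizer (lowercase letters, digits, and apostrophes) and exact-phrase matching; the function names and the default cap of 3.0 are illustrative choices taken from the guideline above, not a threshold Google publishes.

```python
from __future__ import annotations

import re

# Naive tokenizer: lowercase words made of letters, digits, and apostrophes.
WORD_RE = re.compile(r"[a-z0-9']+")

def keyword_rate(text: str, phrase: str) -> tuple[int, float]:
    """Count exact matches of `phrase` in `text` and scale to a per-500-words rate."""
    words = WORD_RE.findall(text.lower())
    target = WORD_RE.findall(phrase.lower())
    n = len(target)
    if not words or n == 0:
        return 0, 0.0
    # Slide a window the length of the phrase across the word list.
    count = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return count, count * 500 / len(words)

def exceeds_guideline(text: str, phrase: str, max_per_500: float = 3.0) -> bool:
    """True if the phrase appears more than max_per_500 times per 500 words."""
    _, rate = keyword_rate(text, phrase)
    return rate > max_per_500

if __name__ == "__main__":
    post = "We make custom rings. " + "filler " * 96  # 100 words in total
    print(keyword_rate(post, "custom rings"))  # (1, 5.0)
```

For example, a 1,000-word post that uses its main keyword eight times works out to 8 × 500 / 1,000 = 4 uses per 500 words, above the suggested range.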

#2 Spammy Footer Links and Tags

A footer is a must-have element for any website. It helps visitors navigate between multiple website sections and provides access to additional information such as contact info and a copyright license.

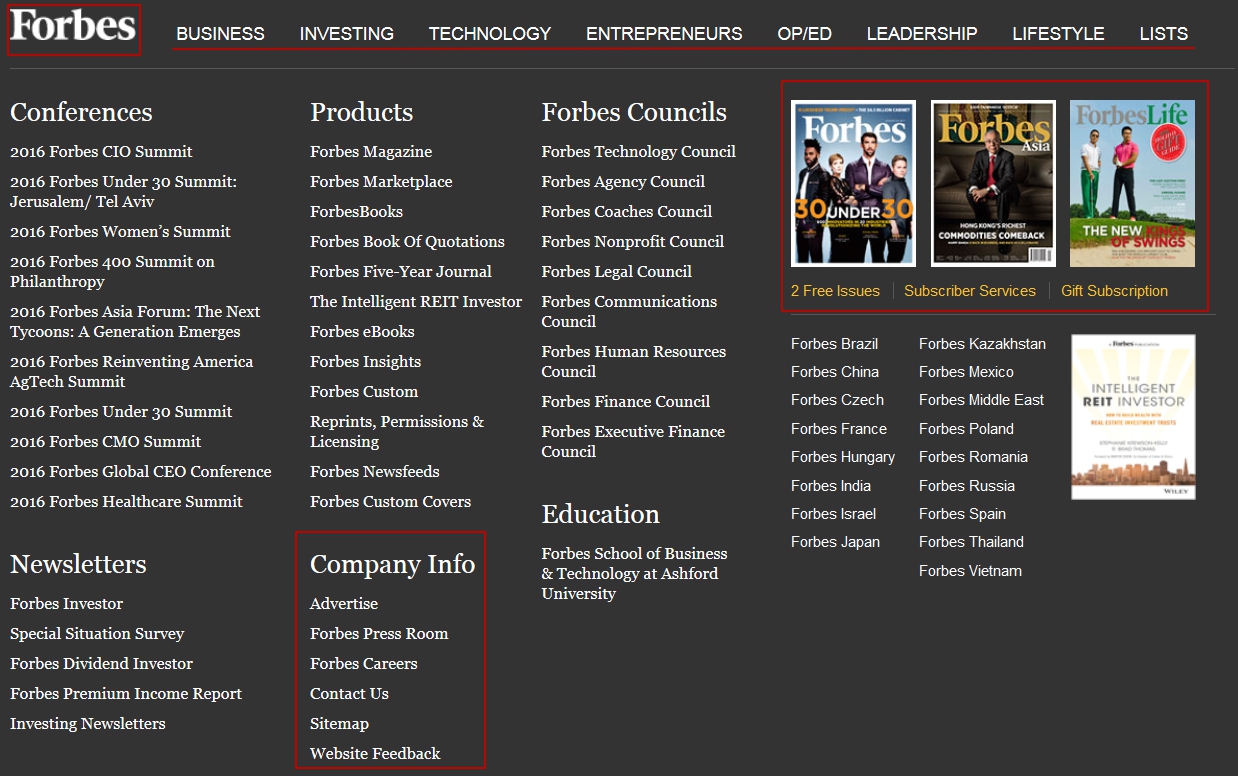

The example below demonstrates how useful an info-heavy footer can be:

Here we have a Forbes logo with tabs leading to the website’s main sections like Business or Technology, Featured Products, and Company Info. The footer also features multiple section headlines that allow users to easily access every part of the website.

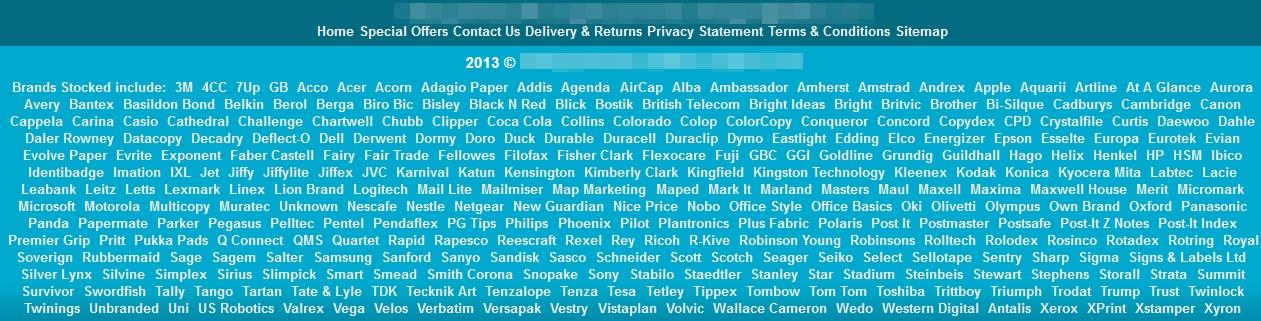

Let’s imagine, though, that a website’s footer is stuffed with hundreds of links and tags. How do you feel about the footer below?

Quite user-unfriendly, right?

It’s no wonder that websites relying on link- and tag-filled footers were penalized. They were hit by two algorithm updates: Panda, which targeted poor website structure, and Penguin, which flagged sites engaging in link and tag manipulation. Avoid spammy footers to achieve a higher search engine ranking position.

Solution

When you optimize a site, make sure it has a clean footer that features vital data like contact information, address, working hours, terms of use, copyright license, navigation buttons, a subscription field, and more. But spamming the footer is a big no-no.

#3 Cloaking

This old-school SEO technique relies on serving two different versions of content at a single URL. The first version is “fed” to bots for crawling, while the second is shown to actual readers.

Cloaking was used to:

- Manipulate search engines into ranking a page higher in the SERPs

- Let a specific page rank high while remaining easy for users to read

- Deliver unrelated content for common queries (e.g., you search “cute little puppies” but end up on a porn site)

Cloaking is an advanced black hat SEO method. It requires identifying search engine spiders by their IP addresses and serving them different page content, which in turn means abusing specific server-side scripts and “behind the scenes” code.

Cloaking was marked as “black hat” years ago. Google has never considered it legitimate, yet it is still widely used in the so-called deep web.

Solution

This one is simple: avoid cloaking. Don’t risk your reputation for a quick SERP ranking.
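If you suspect your own site is cloaking (for example, after a hack injected server-side scripts), one rough self-check is to request the same URL with a crawler-style and a browser-style User-Agent and compare the responses. The sketch below is a hypothetical Python helper built only on the standard library (`urllib.request`, `difflib`). The 0.9 similarity threshold is an arbitrary assumption, raw HTML comparison is noisy on pages with ads or dynamic content, and this check cannot detect cloaking keyed on crawler IP addresses, only on the User-Agent header.

```python
import difflib
import urllib.request

# User-Agent strings used for the comparison; verify the current Googlebot
# string against Google's crawler documentation before relying on it.
BOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

def fetch(url: str, user_agent: str) -> str:
    """Download a page while presenting the given User-Agent header."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")

def similarity(a: str, b: str) -> float:
    """Return a 0.0-1.0 similarity ratio between two documents."""
    return difflib.SequenceMatcher(None, a, b).ratio()

def looks_cloaked(bot_html: str, user_html: str, threshold: float = 0.9) -> bool:
    """Flag the page if the crawler and browser versions differ substantially."""
    return similarity(bot_html, user_html) < threshold
```

Usage (with a placeholder URL): `looks_cloaked(fetch("https://example.com/", BOT_UA), fetch("https://example.com/", BROWSER_UA))`. A `True` result is only a prompt for manual review, not proof of cloaking.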

#4 Internal Linking With Keyword-rich Anchor Text

Internal linking is not a bad thing in itself. Ideally, it connects your web pages into properly structured “paths” that let search crawlers index your site from A to Z. But marketers must walk a fine line when it comes to internal linking.

Repeatedly linking your site’s inner pages with keyword-rich anchor text raises a big red flag for Google, and you risk being hit with an over-optimization penalty.

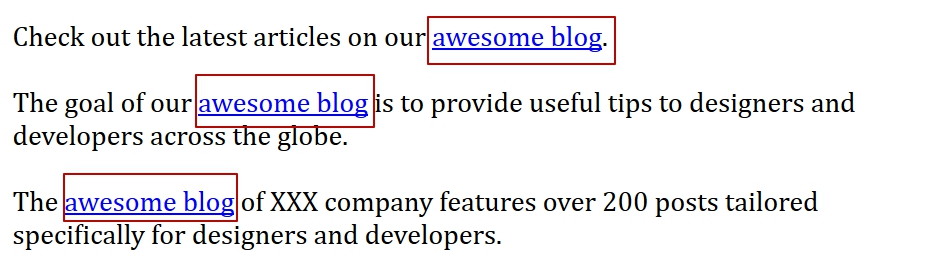

Once, keyword-rich anchor texts were the holy grail of SEO. All you had to do to significantly improve a page’s search engine position was to link it to other pages using similar keyword-rich anchor texts. Basically, you would have one URL and several keyword variations to use in anchor texts, like this:

The three sentences from the example above are located on different pages but feature one URL and similar anchor text. This can negatively impact your website and result in a number of harmful penalties from Google.

Solution

Google puts value first. Make sure the word “value” dominates your content creation process as well. The same is true for links: place them only where they bring real value to users. And don’t forget to use different anchor texts for your inner links.
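To catch the pattern described in this section (one URL linked from many pages with the same keyword-rich anchor), you could audit your own pages. Here is a minimal sketch using Python’s standard `html.parser` module. It assumes you already have each page’s HTML as a string and does not normalize relative versus absolute URLs; the class and function names and the `min_repeats=3` threshold are hypothetical choices for illustration, not a limit Google has published.

```python
from collections import Counter
from html.parser import HTMLParser

class AnchorCollector(HTMLParser):
    """Collects (href, normalized anchor text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only record text while we are inside a link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace and lowercase so near-identical anchors match.
            text = " ".join("".join(self._text).split()).lower()
            self.links.append((self._href, text))
            self._href = None

def repeated_anchors(pages, min_repeats=3):
    """Return {(href, anchor): count} for anchors reused at least min_repeats times."""
    counts = Counter()
    for html in pages:
        parser = AnchorCollector()
        parser.feed(html)
        parser.close()
        counts.update(parser.links)
    return {pair: n for pair, n in counts.items() if n >= min_repeats}
```

Any pair this reports is a candidate for rewording: keep the link if it helps readers, but give it anchor text that describes the destination in natural language.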

#5 Dedicated Pages for Every Keyword Variant

For a long time, keywords played a critical role in how your pages ranked. Though their importance is on the decline, keywords are by no means dead.

These days, you can’t stuff your site with multiple variations of targeted keywords to boost rankings. Instead, focus on:

- Proper keyword selection

- Topic and context

- User intent

You must be careful and avoid over-optimizing your websites. Remember: Panda, Hummingbird, and RankBrain are always looking for sites that are abusing keywords.

In old-school SEO, over-optimization was a must, and reaching a high search engine ranking position meant creating a dedicated page for every keyword variant.

Suppose you wanted to rank a website that sold “custom rings.” Your strategy would have been to create dedicated pages for “custom unique rings,” “custom personalized rings,” “custom design rings,” and every other variation involving the words “custom” and “rings.”

The idea was pretty straightforward: marketers targeted each keyword individually to dominate search for every variation. You sacrificed usability but drove tons of traffic.

This on-page SEO method was 100% legitimate several years ago, but if you were to try it today, you would run the risk of receiving a manual action penalty.

Solution

To avoid a manual action penalty, don’t create separate pages for each keyword variant. Google’s “rules” for ranking pages have changed significantly, rendering old-time strategies obsolete. Using its advanced search algorithms, including RankBrain, Google now prioritizes sites that bring actual value to users.

Your best strategy for reaching the top is to create visually and structurally appealing landing pages for your products and services, publish high-quality, SEO-optimized content on your blog, and build a loyal following on social media platforms.

#6 Content Swapping

Google has been a powerhouse for more than a decade. It has employed thousands of people and invested billions of dollars in research and development, yet its search engine lagged in intelligence for several years.

In the past, SEO practitioners could easily trick Google. Content swapping, for example, was one way to manipulate Google’s algorithms and indexing.

It worked like this:

- Post a piece of content on a site

- Wait until Google bots crawl and index it

- Make sure the page appears in search results

- Block the page or the entire site from indexing

- Swap in different content

For example, a page that originally featured an article about tobacco pipes could end up serving content about prohibited medications or banned substances.

Content swapping has always been forbidden, but advanced black hat SEO professionals used the technique because Google wasn’t quick enough to reindex sites.

Today, Google can almost immediately cut the search ranking of any site that is blocked from indexing. This is why content swapping is no longer a viable strategy and can result in significant penalties.

Solution

Content is king. If you want to reach the top of Google, prioritize creating dense content that provides as much information as possible and adds value for your audience. Google hates sites that manipulate content, so stay away from content swapping.

Conclusion

For better or worse, on-page SEO never stays still. Launching one algorithm update after another, Google is constantly pushing SEO experts and digital marketers to find new opportunities to improve their rankings via organic search.

Google drives innovation forward, making some of the most popular on-page optimization techniques of the past outdated. Marketers can no longer rely on keyword stuffing, thin content, and the myriad other gray and black hat on-page SEO methods.

SEO strategies of the past were technical and manipulative but lacked long-term sustainability. A true SEO pro could easily trick Google by stuffing content with keywords and paid links. The only problem was, it didn’t bring any real value to customers.

The goal is to deliver value. Regardless of where your website currently ranks, every company can benefit from an updated SEO strategy. The key is to avoid the SEO techniques described above and to pursue legitimate, white hat SEO tactics going forward.

Image Credits

Featured Image: erikdegraaf/DepositPhotos

Screenshots by Sergey Grybniak. Taken February 2017.


Author: Sergey Grybniak

The post Old-School SEO: 6 On-Page Optimization Techniques that Google Hates by @grybniak appeared first on On Page SEO Checker.

source http://www.onpageseochecker.com/old-school-seo-6-on-page-optimization-techniques-that-google-hates-by-grybniak/
