When you hear the words “search engine optimization,” what do you think of? My mind leaps straight to a list of SEO ranking factors, such as proper tags, relevant keywords, a clean sitemap, great design elements, and a steady stream of high-quality content.

However, a recent article by my colleague, Yauhen Khutarniuk, made me realize that I should be adding “crawl budget” to my list. While many SEO experts overlook crawl budget because it’s not very well understood, Khutarniuk brings some compelling evidence to the table – which I’ll come back to later in this post – that crawl budget can, and should, be optimized.

This made me wonder: how does crawl budget optimization overlap with SEO, and what can websites do to improve their crawl rate?

First Things First – What Is a Crawl Budget?

Web services and search engines use web crawler bots, aka “spiders,” to crawl web pages, collect information about them, and add them to their index. These spiders also detect links on the pages they visit and attempt to crawl these new pages too.

Examples of bots that you’re probably familiar with include Googlebot, which discovers new pages and adds them to the Google Index, or Bingbot, Microsoft’s equivalent. Most SEO tools and other web services also rely on spiders to gather information. For example, my company’s backlink index, WebMeUp, is built using a spider called BLEXBot, which crawls up to 15 billion pages daily gathering backlink data.

The number of times a search engine spider crawls your website in a given time allotment is what we call your “crawl budget.” So if Googlebot hits your site 32 times per day, we can say that your typical Google crawl budget is approximately 960 per month.

You can use tools such as Google Search Console and Bing Webmaster Tools to estimate your website’s crawl budget. In Google Search Console, go to Crawl > Crawl Stats to see the average number of pages crawled per day.
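Your server’s access logs are another place to check this, since they record every crawler request. The Python sketch below assumes logs in the common Apache/Nginx "combined" format. It counts lines whose user-agent string contains "Googlebot", groups them by day, and projects a monthly figure from an assumed 30-day month. The function names are illustrative, not part of any tool. User-agent strings can be spoofed, so treat the count as an upper bound unless you also verify the requesting IPs with the reverse-DNS check Google documents.

```python
import re
from collections import Counter
from datetime import datetime

# Assumption: "combined" log format, for example:
# 66.249.66.1 - - [10/Sep/2016:13:55:36 +0000] "GET /page HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_per_day(lines):
    """Count requests whose user agent mentions Googlebot, grouped by calendar day."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            day = datetime.strptime(match.group("day"), "%d/%b/%Y").date()
            hits[day] += 1
    return hits


def estimated_monthly_budget(hits, days_per_month=30):
    """Average daily hits multiplied by an assumed number of days per month."""
    if not hits:
        return 0
    average = sum(hits.values()) / len(hits)
    return round(average * days_per_month)
```

For a log in which Googlebot averaged 32 requests a day, `estimated_monthly_budget` returns 960, the same back-of-the-envelope figure as above.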

Is Crawl Budget Optimization the Same as SEO?

Yes – and no. While both types of optimization aim to make your pages more visible and may affect your SERP rankings, SEO places a heavier emphasis on user experience, while spider optimization is entirely about appealing to bots. As Neil Patel says on KISSmetrics:

“Search engine optimization is focused more upon the process of optimizing for user’s queries. Googlebot optimization is focused upon how Google’s crawler accesses your site.”

So how do you optimize your crawl budget specifically? I’ve gathered the following nine tips to help you make your website as crawlable as possible.

How to Optimize Your Crawl Budget

1. Ensure Your Pages Are Crawlable

Your page is crawlable if search engine spiders can find it and follow the links within your website. Make sure your .htaccess and robots.txt files don’t block your site’s critical pages. You may also want to provide text versions of pages that rely heavily on rich media files, such as Flash and Silverlight.
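To spot-check that robots.txt isn’t blocking pages you care about, you can evaluate its rules with Python’s standard-library `urllib.robotparser`. In this minimal sketch, the robots.txt content and the list of "critical" URLs are hypothetical placeholders. The parser implements the classic prefix-matching rules and may not interpret Google-specific wildcard patterns the same way Googlebot does. It also ignores .htaccess entirely.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; substitute your site's real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

# Hypothetical pages you consider critical for search.
CRITICAL_PAGES = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/admin/settings",
]


def blocked_pages(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the robots.txt rules forbid the given crawler to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_pages(ROBOTS_TXT, CRITICAL_PAGES):
        print("Blocked:", url)
```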

Of course, the opposite is true if you do want to prevent a page from showing up in search results. However, adding a “Disallow” rule to robots.txt is not enough to stop a page from being indexed. According to Google: “Robots.txt Disallow does not guarantee that a page will not appear in results.”

If external information (e.g. incoming links) continues to direct traffic to the page you’ve disallowed, Google may decide the page is still relevant. In this case, you’ll need to explicitly block the page from being indexed by using the noindex robots meta tag or the X-Robots-Tag HTTP header.

- noindex meta tag: Place the following meta tag in the <head> section of your page to prevent most web crawlers from indexing your page:

`<meta name="robots" content="noindex" />`

- X-Robots-Tag: Add the following header to the page’s HTTP response to tell crawlers not to index it:

X-Robots-Tag: noindex

Note that if you use the noindex meta tag or the X-Robots-Tag header, you should not disallow the page in robots.txt. The page must be crawled before the tag can be seen and obeyed.
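If you want to confirm that a page actually sends one of these signals, you can check the response headers and HTML you’ve already fetched. The sketch below uses Python’s built-in `html.parser`. The function and class names are hypothetical. It only recognizes the plain `noindex` directive and ignores user-agent-specific forms such as `X-Robots-Tag: googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <meta ... /> tags also arrive here via handle_startendtag.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            self.directives.extend(
                part.strip().lower() for part in attributes.get("content", "").split(",")
            )


def is_noindexed(headers, html):
    """True if an X-Robots-Tag header or a robots meta tag contains noindex."""
    header_value = ",".join(
        value for name, value in headers.items() if name.lower() == "x-robots-tag"
    )
    header_directives = [part.strip().lower() for part in header_value.split(",")]
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in header_directives or "noindex" in parser.directives
```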

2. Use Rich Media Files Cautiously

There was a time when Googlebot couldn’t crawl content like JavaScript, Flash, and HTML. Those times are mostly past (though Googlebot still struggles with Silverlight and some other files).

However, even if Google can read most of your rich media files, other search engines may not be able to. That means you should use these files judiciously, and you probably want to avoid them entirely on the pages you want to rank.

Google maintains a full list of the file types it can index in its documentation.

3. Avoid Redirect Chains

Each URL you redirect to wastes a little of your crawl budget. When your website has long redirect chains, i.e. a large number of 301 and 302 redirects in a row, spiders such as Googlebot may drop off before they reach your destination page, which means that page won’t be indexed. Best practice with redirects is to have as few as possible on your website, and no more than two in a row.
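If you have a list of your site’s redirects, for example from a crawl export or your server config, you can flag long chains and loops programmatically. In this sketch the redirect map is hypothetical, and the two-hop limit comes from the rule of thumb above. It follows each source URL to its final destination and reports any chain that is too long or that loops back on itself.

```python
# Hypothetical redirect map: source path -> target path.
REDIRECTS = {
    "/old-a": "/old-b",
    "/old-b": "/old-c",
    "/old-c": "/final",
    "/promo": "/final",
}


def redirect_chain(start, redirects):
    """Follow redirects from `start`; stop at a final URL or when a URL repeats."""
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            break  # loop: a crawler following these redirects never reaches a real page
        seen.add(current)
    return chain


def problem_chains(redirects, max_hops=2):
    """Map each source to its chain when it exceeds max_hops or loops back on itself."""
    report = {}
    for source in redirects:
        chain = redirect_chain(source, redirects)
        loops = chain[-1] in chain[:-1]
        if len(chain) - 1 > max_hops or loops:
            report[source] = chain
    return report
```

Here only `/old-a` is reported, because it needs three hops. A typical fix is to point `/old-a` and `/old-b` straight at `/final`, so every source resolves in a single hop.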

4. Fix Broken Links

When asked whether or not broken links affect web ranking, Google’s John Mueller once said:

If what Mueller says is true, this is one of the fundamental differences between SEO and Googlebot optimization, because it would mean that broken links do not play a substantial role in rankings, even though they greatly impede Googlebot’s ability to index and rank your website.

That said, you should take Mueller’s advice with a grain of salt – Google’s algorithm has improved substantially over the years, and anything that affects user experience is likely to impact SERPs.
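Whatever their effect on rankings, broken links send crawlers to dead ends. If you already have crawl results, a few lines of Python can list every link that resolves to a 4xx or 5xx response so you can fix or remove it. The link map and status codes below are hypothetical.

```python
# Hypothetical crawl results: links found on each page, and the HTTP status
# code each URL returned when it was fetched.
LINKS = {
    "/": ["/about", "/blog", "/old-offer"],
    "/blog": ["/blog/post-1", "/missing-post"],
}
STATUS = {
    "/": 200,
    "/about": 200,
    "/blog": 200,
    "/blog/post-1": 200,
    "/old-offer": 410,
    "/missing-post": 404,
}


def broken_links(links, status):
    """Return (page, link, status) for every link with a 4xx/5xx status.

    Links missing from the status map are reported with status None so they
    can be fetched and checked separately.
    """
    problems = []
    for page, targets in links.items():
        for target in targets:
            code = status.get(target)
            if code is None or code >= 400:
                problems.append((page, target, code))
    return problems
```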

5. Set Parameters on Dynamic URLs

Spiders treat dynamic URLs that lead to the same page as separate pages, which means you may be unnecessarily squandering your crawl budget. You can manage your URL parameters in Google Search Console by clicking Crawl > URL Parameters. From there, you can tell Googlebot which parameters your CMS adds to your URLs that don’t change a page’s content.
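Before you configure those settings, it can help to see how many crawled URLs collapse into the same page once content-neutral parameters are removed. This sketch uses Python’s `urllib.parse`. The set of ignorable parameters is a hypothetical example; which parameters are actually safe to ignore depends entirely on your CMS.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that, on this example site, never change page content.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def normalize(url, ignored=IGNORED_PARAMS):
    """Drop content-neutral parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in ignored
    )
    # The fragment is dropped as well, since it is never sent to the server.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Group crawled URLs that normalize to the same address."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```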

6. Clean Up Your Sitemap

XML sitemaps help users and spider bots alike by making your content better organized and easier to find. Keep your sitemap up to date and purge it of any clutter that may harm your site’s usability, including 400-level pages, unnecessary redirects, non-canonical pages, and blocked pages.

The easiest way to clean up your sitemap is to use a tool like Website Auditor (disclaimer: my tool). You can use Website Auditor’s XML sitemap generator to create a clean sitemap that excludes all pages blocked from indexing. Plus, by going to Site Audit, you can easily find and fix all 4xx status pages, 301 and 302 redirects, and non-canonical pages.
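If you’d rather script the filtering step yourself, the logic is short. This sketch assumes you already have audit records for each page (the records below are hypothetical). It keeps only URLs that return 200, are their own canonical, and aren’t marked noindex, then serializes them as a sitemaps.org `<urlset>` with Python’s `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical audit records: (url, status_code, canonical_url, noindex)
PAGES = [
    ("https://www.example.com/", 200, "https://www.example.com/", False),
    ("https://www.example.com/shoes", 200, "https://www.example.com/shoes", False),
    ("https://www.example.com/shoes?color=red", 200, "https://www.example.com/shoes", False),
    ("https://www.example.com/old-page", 301, None, False),
    ("https://www.example.com/gone", 404, None, False),
    ("https://www.example.com/thank-you", 200, "https://www.example.com/thank-you", True),
]


def sitemap_urls(pages):
    """Keep pages that return 200, are self-canonical, and are not noindexed."""
    return [
        url
        for url, status, canonical, noindex in pages
        if status == 200 and canonical == url and not noindex
    ]


def build_sitemap(urls):
    """Serialize the URLs as a <urlset> document (without an XML declaration)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")
```

To write an actual sitemap file, wrap the element in `ET.ElementTree` and call `write(path, encoding="UTF-8", xml_declaration=True)`.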

7. Make Use of Feeds

Feeds, such as RSS and Atom (both XML-based formats), allow websites to deliver content to users even when they’re not browsing the site. This allows users to subscribe to their favorite sites and receive regular updates whenever new content is published.

While RSS feeds have long been a good way to boost readership and engagement, they’re also among the resources Googlebot visits most often. When your website receives an update (e.g. new products, a blog post, or a site update), submit it to Google’s FeedBurner so that you’re sure it’s properly indexed.
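If your CMS doesn’t already publish a feed, the sketch below shows the minimal shape of an RSS 2.0 document, built with Python’s `xml.etree.ElementTree`. The channel details and items are hypothetical. The sketch covers the three required channel elements (title, link, description) plus a few common item fields. It doesn’t show how FeedBurner or any other service ingests the feed.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title, link, description, items):
    """Return a minimal RSS 2.0 document; items are (title, url, published) tuples."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item_title, url, published in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = item_title
        ET.SubElement(item, "link").text = url
        ET.SubElement(item, "guid").text = url
        # RSS 2.0 uses RFC 822-style dates, which format_datetime produces.
        ET.SubElement(item, "pubDate").text = format_datetime(published)
    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    print(build_rss(
        "Example Blog",
        "https://www.example.com/",
        "Hypothetical feed for illustration",
        [("First post", "https://www.example.com/first-post",
          datetime(2016, 9, 1, 9, 0, tzinfo=timezone.utc))],
    ))
```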

8. Build External Links

Link building is still a hot topic – and I doubt it’s going away anytime soon. As SEJ’s Anna Crowe elegantly put it:

“Cultivating relationships online, discovering new communities, building brand value – these small victories should already be imprints on your link-planning process. While there are distinct elements of link building that are now so 1990s, the human need to connect with others will never change.”

Now, in addition to Crowe’s excellent point, we also have evidence from Yauhen Khutarniuk’s experiment that external links closely correlate with the number of spider visits your website receives.

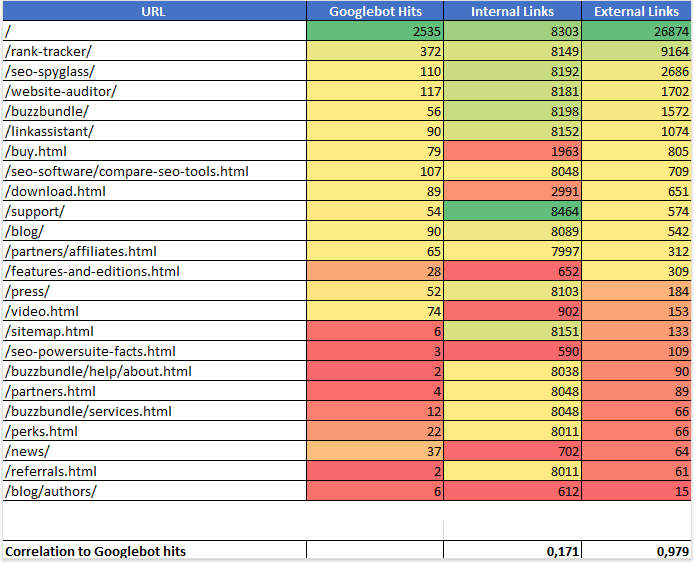

In his experiment, he used our tools to measure all of the internal and external links pointing to every page on 11 different sites. He then analyzed crawl stats on each page and compared the results. This is an example of what he found on just one of the sites he analyzed:

While the data set couldn’t prove any conclusive connection between internal links and crawl rate, Khutarniuk did find an overall “strong correlation (0,978) between the number of spider visits and the number of external links.”
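A correlation coefficient like the one quoted (written with a decimal comma, i.e. 0.978) is usually Pearson’s r, which ranges from -1 to 1. The post doesn’t say exactly how the figure was computed, so the sketch below is simply the standard Pearson formula applied to two per-page lists, such as external link counts and spider visits. A value close to 1 means the two numbers rise together. On its own, it doesn’t show that one causes the other.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return covariance / sqrt(var_x * var_y)
```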

9. Maintain Internal Link Integrity

While Khutarniuk’s experiment found no conclusive connection between internal linking and crawl rate, that doesn’t mean you can disregard internal links altogether. A well-maintained site structure makes your content easily discoverable by search bots without wasting your crawl budget.

A well-organized internal linking structure may also improve user experience – especially if users can reach any area of your website within three clicks. Making everything more easily accessible in general means visitors will linger longer, which may improve your SERPs.
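You can check the three-click guideline with a breadth-first search over your internal link graph. A page’s click depth is the length of the shortest path to it from the homepage. The graph below is hypothetical; in practice you would build it from a crawl of your own site. Pages that never appear in the search results are unreachable from the homepage, so the sketch reports them as orphans.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
SITE = {
    "/": ["/products", "/blog"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red"],
    "/products/shoes/red": ["/products/shoes/red/size-9"],
    "/blog": ["/"],
    "/landing-2015": [],  # nothing links here
}


def click_depths(graph, home="/"):
    """Breadth-first search: minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for linked in graph.get(page, []):
            if linked not in depths:
                depths[linked] = depths[page] + 1
                queue.append(linked)
    return depths


def too_deep(graph, limit=3, home="/"):
    """Pages that need more than `limit` clicks from the homepage."""
    return sorted(page for page, depth in click_depths(graph, home).items() if depth > limit)


def orphan_pages(graph, home="/"):
    """Known pages that cannot be reached from the homepage at all."""
    return sorted(set(graph) - set(click_depths(graph, home)))
```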

Conclusion: Does Crawl Budget Matter?

By now, you’ve probably noticed a trend in this article – the best-practice advice that improves your crawlability tends to improve your searchability as well. So if you’re wondering whether or not crawl budget optimization is important for your website, the answer is YES – and it will probably go hand-in-hand with your SEO efforts anyway.

Put simply, when you make it easier for Google to discover and index your website, you’ll enjoy more crawls, which means faster updates when you publish new content. You’ll also improve overall user experience, which improves visibility and ultimately results in better SERP rankings.

Image credits:

All screenshots by Aleh Barysevich. Taken September 2016.


Author: Aleh Barysevich

The post 9 Tips to Optimize Crawl Budget for SEO by @ab80 appeared first on On Page SEO Checker.

source http://www.onpageseochecker.com/9-tips-to-optimize-crawl-budget-for-seo-by-ab80/
